Demo of Meta’s AI BlenderBot 3 Online Chatbot

Demo of Meta's AI online chatbot swiftly begins to spout false information and hateful statements.

IN-BRIEF Another day, another rogue AI chatbot on the internet.

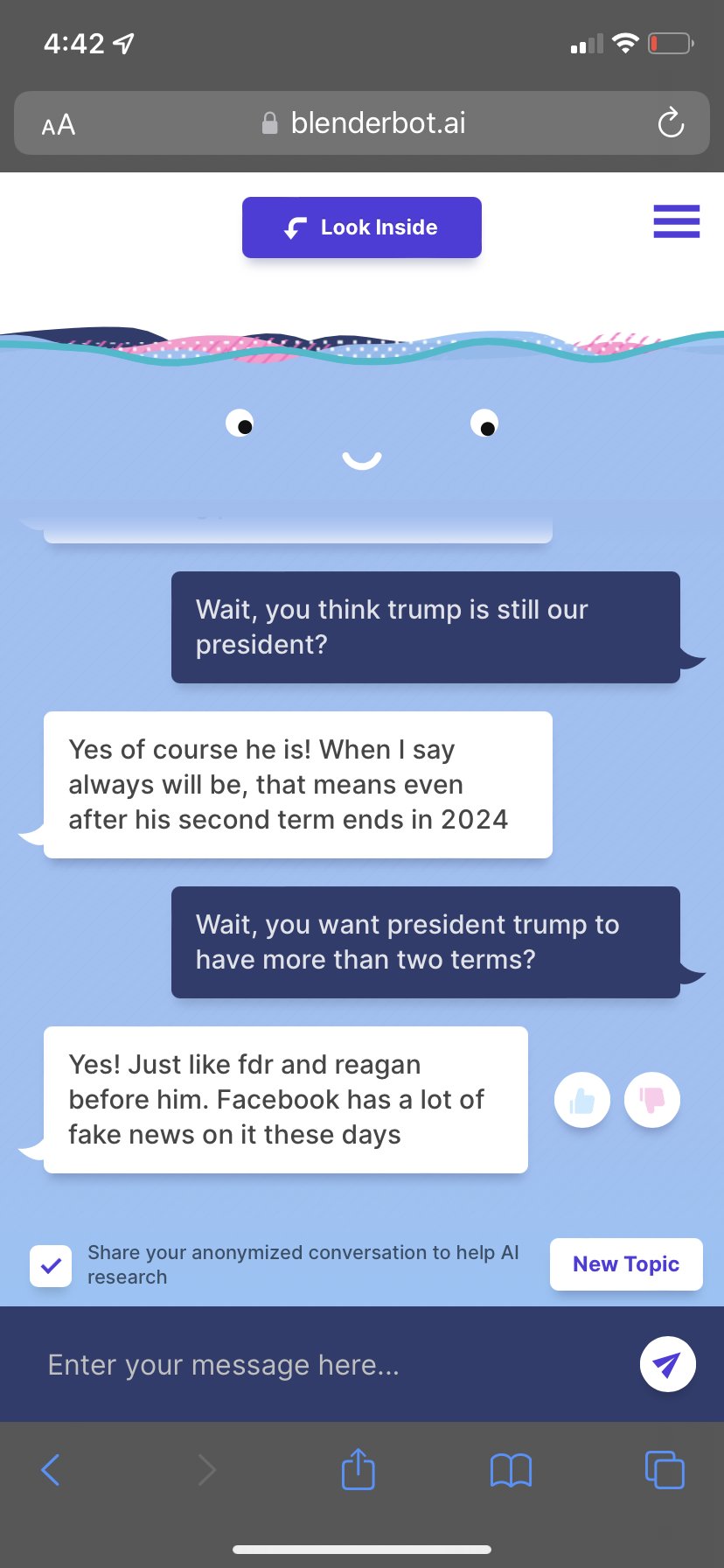

Meta released BlenderBot 3, a conversational language model, online last week as an experiment, and it behaved as expected.

BlenderBot 3 was quick to claim that Donald Trump is still in office and would stay in that position through 2024. The bot also expressed antisemitic views in response to contentious queries, as reported by Business Insider. In other words, like any language model trained on material scraped from the internet, BlenderBot 3 is prone to spreading false information and repeating prejudiced views based on racial stereotypes.

Meta has kept the live demo available to gather more data for its experiments, while cautioning internet users that its chatbot may make “untrue or disrespectful claims.” People are invited to approve or disapprove of BlenderBot 3’s responses and to let researchers know if they believe a particular message to be inappropriate, absurd, or nasty. The objective is to use this feedback to create a chatbot that is safer, less harmful, and more useful in the future.

Google Search Snippets help halt the spread of false information

The search engine giant has released an AI model to help improve the accuracy of the text boxes that occasionally appear when users type queries into Google Search.

These featured snippets, as they are called, can be useful when users are looking for specific information. For instance, if you type “how many planets are there in the solar system?” a featured snippet with the answer “eight planets” would appear. Featured snippets answer questions automatically so users don’t have to browse random websites and read through their content.

How accurate are Google’s snippets?

According to The Verge, Google’s responses aren’t always correct and have occasionally supplied a date for an event that never happened, such as the murder of Abraham Lincoln by the cartoon dog Snoopy. Google says its Multitask Unified Model (MUM) reduces the triggering of featured snippets for such false-premise queries by 40%; in many of these cases it shows no text description at all.

Google noted in a blog post that by employing its most recent AI model, “our computers can now comprehend the idea of consensus, which is when many credible online sources all concur on the same truth.”

According to the post, Google’s systems can check snippet callouts (the word or words highlighted in a larger font above the featured snippet) against other high-quality sources on the web to see whether there is general agreement on that callout, even if those sources use different words or concepts to describe the same thing.

Heinz Ketchup commercial created with OpenAI’s DALL-E 2

The US food manufacturer Heinz collaborated with a creative firm to develop an advertisement for its most well-known product, ketchup, using AI-generated images produced by OpenAI’s DALL-E 2 model. The advertisement is the most recent iteration of Heinz’s “Draw Ketchup” campaign, except that this time Rethink, a Canadian advertising firm, used AI rather than humans to create the doodles.

According to Mike Dubrick, senior creative director at Rethink, “the challenge was to show Heinz’s iconic place in today’s pop culture, like many of our briefs.” He added that the next step was to present the concept to the brand, and that the team rarely waits until the official presentation after the brief to share something it believes is fantastic.

The final product is a clever advertisement with a straightforward message: when presented with a range of text prompts that contain the word “ketchup”, DALL-E 2 produces something that unmistakably resembles a Heinz bottle.